What Is LiDAR? (& Why Is It on Apple Devices All of a Sudden)

If you’ve seen any of Apple’s new iPad Pro commercials, you’ve undoubtedly seen them touting the new LiDAR sensor, and maybe you wondered, just for a sec: what is LiDAR?

Well, in this Decodr episode, my explainer series here on the channel, let’s talk about this clever little measurement tool.

What is LiDAR?

So, first off, LiDAR isn’t new; it has been around for quite a while.

It’s mainly used by scientists to survey the surface of the Earth, often from lidar-equipped drones, and it’s also used alongside other sensors on many autonomous cars to help them better sense the objects around them.

LiDAR stands for Light Detection and Ranging. It’s similar to radar, except that it uses light instead of radio waves (radar, by the way, stands for radio detection and ranging).

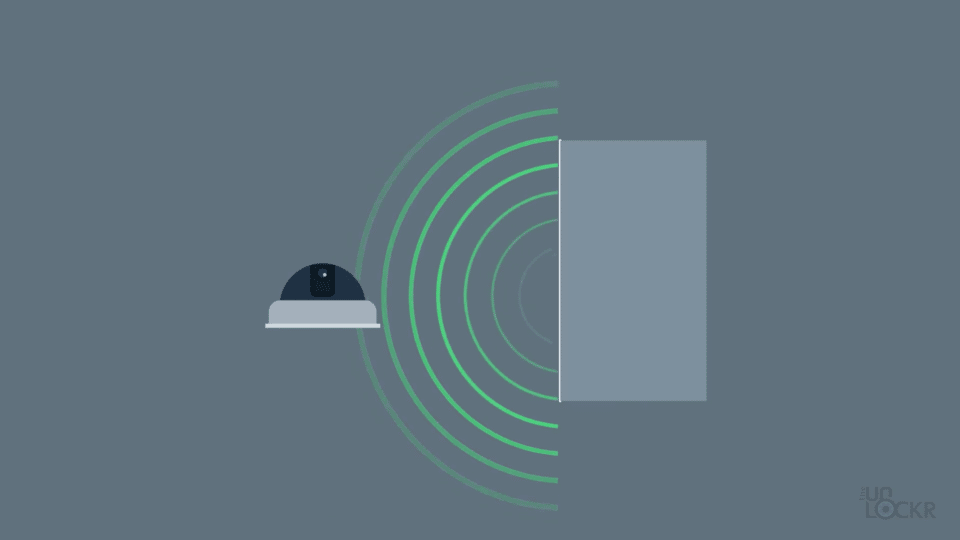

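The way lidar works is that it fires out rapid pulses of laser light in quick succession (a laser being a tightly focused, narrow beam of light at essentially one wavelength). That light bounces off objects in front of the sensor and returns to it. Because light travels at a known, constant speed, the time each pulse takes to make that round trip tells you how far away the object is: the distance is the speed of light multiplied by the round-trip time, divided by two, since the light covers the gap twice. Repeat that for lots of pulses and the measurements can be combined into a 3D model used to work out an object’s distance, height, shape, and so on.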
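The round-trip calculation described above is simple enough to sketch in a few lines. This is a minimal illustration, not any vendor’s implementation; the function names are made up for the example, and it ignores the small slowdown of light in air. The second function shows why the timing electronics matter: resolving a 1 cm change in distance means timing the round trip to within roughly 67 picoseconds.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
# Assumption: we ignore the ~0.03% slowdown of light in air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance to the target, in metres.

    The pulse travels out and back, so the target is half the total path away.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def timing_needed_for(resolution_m: float) -> float:
    """Round-trip timing precision (in seconds) needed to resolve a given distance step."""
    return 2.0 * resolution_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A return arriving about 33.4 nanoseconds after the pulse left is about 5 m away.
    print(f"{distance_from_round_trip(33.36e-9):.3f} m")
    # Resolving 1 cm requires timing the round trip to roughly 67 picoseconds.
    print(f"{timing_needed_for(0.01) * 1e12:.1f} ps")
```

Radar ranging uses the same arithmetic, since radio waves also travel at the speed of light.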

LiDAR vs RADAR

Radar, on the other hand, does the same thing with radio waves. It sends out radio waves, they bounce off objects, and the time it takes for them to return is measured to determine the distance and position of those objects.

Now, one of the major differences between lidar and radar is that lidar is a lot more precise and can create much more detailed 3D models.

Radar, on the other hand, sees objects more as coarse blocks.

Because of that, lidar makes it much easier to determine things like which way a person is facing, a hand versus a face, or the number of branches on a tree, where radar would have a much harder time.
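One physical reason for the difference in detail is wavelength: the finest angular detail a sensor’s aperture can resolve scales with wavelength divided by aperture size (the Rayleigh criterion, about 1.22 λ / D). The sketch below compares the two under purely hypothetical assumptions: a 905 nm near-infrared lidar, a 77 GHz radar, the same 5 cm aperture for both, and objects 20 m away. Real lidar units are usually limited by how many points they fire rather than by this diffraction floor, so treat the numbers as an illustration of scale only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def rayleigh_angle_rad(wavelength_m: float, aperture_m: float) -> float:
    """Smallest angular separation a circular aperture can resolve (Rayleigh criterion)."""
    return 1.22 * wavelength_m / aperture_m


def smallest_resolvable_m(wavelength_m: float, aperture_m: float, range_m: float) -> float:
    """Approximate spacing between two resolvable points at a given range (small-angle)."""
    return range_m * rayleigh_angle_rad(wavelength_m, aperture_m)


# Hypothetical comparison: same 5 cm aperture, objects 20 m away.
APERTURE_M = 0.05
RANGE_M = 20.0
lidar_wavelength_m = 905e-9                          # assumed near-infrared lidar
radar_wavelength_m = SPEED_OF_LIGHT_M_PER_S / 77e9   # assumed 77 GHz radar (~3.9 mm)

for name, wavelength in (("lidar", lidar_wavelength_m), ("radar", radar_wavelength_m)):
    spacing = smallest_resolvable_m(wavelength, APERTURE_M, RANGE_M)
    print(f"{name}: ~{spacing * 100:.2f} cm between resolvable points")
```

With these assumptions the radar figure is about 4,300 times coarser than the lidar one (simply the ratio of the two wavelengths), which is the "blocks" effect in numbers.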

On the flip side, though, radar has a much longer range than lidar and is much cheaper. It also isn’t as affected by rain, fog, and snow, which can scatter and block light and so interfere with lidar.

Generally speaking, in autonomous cars and in lidar drones and planes, either of these systems is used alongside GPS, ultrasonic sensors, and cameras to round out the overall system.

Why Are We Seeing LiDAR Sensors Now?

So why are we starting to see it in consumer electronics? Well, time-of-flight, or ToF, systems have been making their way onto phones for a while now to help with AR as well as things like portrait mode and focusing. Technically, time of flight is the method: measuring how long light takes to travel to an object and back in order to determine its distance. LiDAR is the sensor being used to do it.

If you’re familiar with Samsung, Oppo, Motorola, and the other manufacturers, you’ll know they have also had 3D ToF sensors on their devices for a while now. So you’re probably wondering: what’s the difference between Apple’s LiDAR sensor and theirs?

LiDAR vs Time of Flight?

Both the ToF camera systems on Android phones and Apple’s new LiDAR sensor technically work by bouncing infrared light off objects to determine distance, which helps with the aforementioned depth information, AR, and so on. The difference is that Apple’s sensor uses a scanning system that covers the area with multiple points, while the others use a scannerless system that lights up the scene with a single flash of infrared light.
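To make the "multiple points" idea concrete, here is a sketch of how a scanning sensor could turn per-point distance readings into a 3D point cloud. Everything here is hypothetical: the 8×8 grid, the ±20° field of view, and the flat wall 2 m away are made up for illustration and say nothing about Apple’s actual dot pattern. The key point is that each reading comes with a known beam direction, so distance plus direction gives a 3D position.

```python
import math
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def beam_direction(azimuth_rad: float, elevation_rad: float) -> Point3D:
    """Unit vector for a beam: x to the right, y up, z straight ahead."""
    return (
        math.cos(elevation_rad) * math.sin(azimuth_rad),
        math.sin(elevation_rad),
        math.cos(elevation_rad) * math.cos(azimuth_rad),
    )


def scan_grid(rows: int, cols: int, half_fov_rad: float) -> List[Tuple[float, float]]:
    """Evenly spaced (azimuth, elevation) pairs covering +/- half_fov_rad (rows, cols >= 2)."""
    def steps(n: int) -> List[float]:
        return [-half_fov_rad + 2 * half_fov_rad * i / (n - 1) for i in range(n)]
    return [(az, el) for el in steps(rows) for az in steps(cols)]


def to_point(azimuth_rad: float, elevation_rad: float, range_m: float) -> Point3D:
    """Place a point at the measured distance along the beam's direction."""
    dx, dy, dz = beam_direction(azimuth_rad, elevation_rad)
    return (range_m * dx, range_m * dy, range_m * dz)


# Hypothetical scene: a flat wall 2 m straight ahead (the plane z = 2).
WALL_DISTANCE_M = 2.0
cloud: List[Point3D] = []
for az, el in scan_grid(rows=8, cols=8, half_fov_rad=math.radians(20)):
    _, _, dz = beam_direction(az, el)
    measured_range_m = WALL_DISTANCE_M / dz  # stand-in for a real time-of-flight reading
    cloud.append(to_point(az, el, measured_range_m))

print(len(cloud), "points; every z is about", round(cloud[0][2], 6), "m")
```

A flash-based system skips the beam steering and instead gets a distance reading for every sensor pixel at once from a single burst of light.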

Regardless of the method, the software has to take all of the reflections, work out which ones are indirect, off-angle bounces, remove those from the calculations, and then crunch the numbers on the reflections it actually cares about. That’s simply harder to do with a single flash than with multiple points: a flash lights up the whole scene at once, so stray bounces land on the sensor mixed in with the direct ones, whereas each scanned point comes from a known beam direction.
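Here is one simple way the "throw out the indirect reflections" step can work for a single scanned point: an echo that bounced off something else first has travelled a longer route, so it arrives later than the direct one. The sketch keeps the earliest echo that rises above a noise threshold. It is a simplified first-return heuristic under assumed numbers (the `Echo` type, the threshold, and the sample echoes are all hypothetical), not the processing any particular phone uses; real systems have to handle harder cases such as glass, where the first return may not be the surface you want.

```python
from dataclasses import dataclass
from typing import List, Optional

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass
class Echo:
    time_s: float      # round-trip time of this echo, in seconds
    intensity: float   # relative strength of the echo (arbitrary units)


def direct_distance_m(echoes: List[Echo], noise_floor: float) -> Optional[float]:
    """Estimate distance from the earliest echo that rises above the noise floor.

    Indirect (multipath) echoes travel a longer route than the direct bounce along
    the same beam, so they arrive later; this simplified heuristic keeps the first.
    Returns None if no echo clears the noise floor.
    """
    candidates = [e for e in echoes if e.intensity > noise_floor]
    if not candidates:
        return None
    first = min(candidates, key=lambda e: e.time_s)
    return SPEED_OF_LIGHT_M_PER_S * first.time_s / 2.0


# Hypothetical echoes for one beam: a noise blip, the direct bounce (~1.5 m),
# then a weaker echo that went via a side wall first and so took longer.
echoes = [
    Echo(time_s=4.0e-9, intensity=0.02),
    Echo(time_s=10.0e-9, intensity=0.80),
    Echo(time_s=16.0e-9, intensity=0.30),
]
print(direct_distance_m(echoes, noise_floor=0.05))
```

With a flash system, the direct and indirect light for a pixel arrive blended together rather than as separate, tidy echoes like these, which is part of why the cleanup is harder there.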

The downside to LiDAR in this application, however, is the same as with most new technologies: it’s more expensive, of course. On top of that, the only real use cases for it right now are 3D effects like portrait mode and AR applications, which haven’t quite taken off in the general public’s eye. But as these technologies become more prevalent in our devices, maybe we’ll see new use cases that can benefit from it. Personally, I’ve seen some people put together autofocus systems using LiDAR and time of flight, and I’d love to see more accurate autofocus come out of it. We’ll see.

And there you go. Let me know what you guys think in the comments below.